Current Projects

Robot nurse helper

This project develops a companion robot to help nurses in a pediatric ward. Taiwan has a shortage of nurses, due to the change in population structure caused by sub-replacement fertility. Our goal is to enable a social robot to take over some nursing tasks and reduce the workload of human nurses. Our first task is to monitor pediatric patients' emotions and pain levels. Introduction slides (Link). Code is available on my GitHub page (Link).

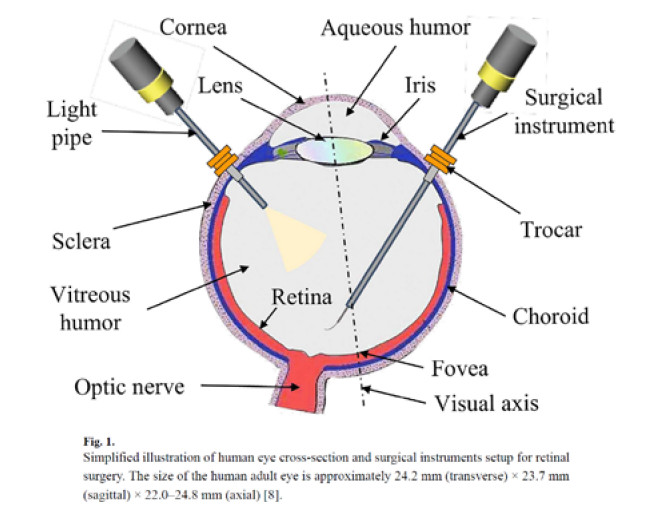

Depth estimation in an eyeball

Robotic assistance for intraocular microsurgery is a cutting-edge research field because it promises to expand human capabilities and improve the safety and efficiency of this intricate procedure. Because depth is critical in intraocular microsurgery, this project aims to estimate the depth of medical devices inside an eyeball. We want to develop a method that can warn the operator if a device gets too close to the retina.

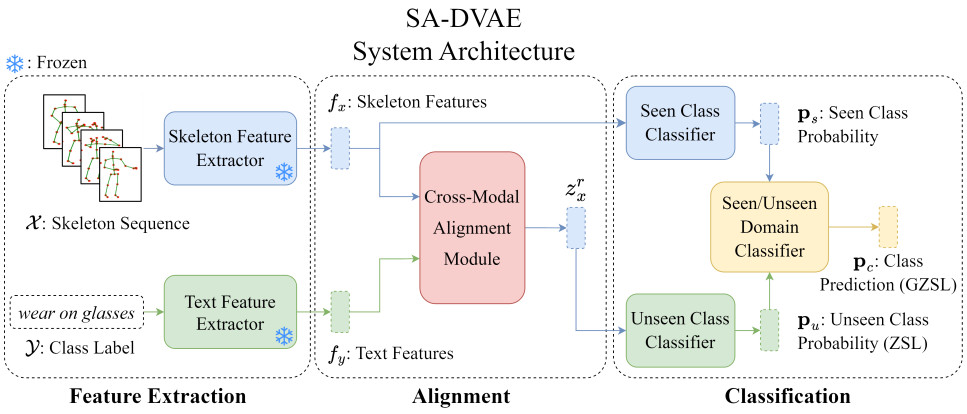

Zero-shot skeleton-based action recognition

Our current results were published at ECCV’24, and the paper is available on my publications page (Link). However, several questions remain open. For example, why does the method work poorly on some action datasets? How does the quality of the text affect the method’s performance? What happens if we refine the text descriptions of the NTU RGB+D dataset? Is the skeleton data of the NTU RGB+D dataset so noisy that the noise is what makes the proposed method work? Given a high-quality skeleton action dataset, will the proposed method still work? Answering these questions requires further research.

Updates

One ECCV'24 paper accepted - August 28, 2024

Brian Liu's team won a 100k-NTD startup support fund from the Ministry of Education - April 22, 2024

Brian Liu's team won the championship of the 2023 CGU Student Startup Competition - December 7, 2023